Soul Zhang Lu Reaches for Human-like AI Conversations

The CEO and founder of Soul App, Zhang Lu, discovered early on that digital communication powered by artificial intelligence could turn her social networking platform into a trailblazer. So, while most social networking apps are now turning to artificial intelligence, Soul took to this path years ago.

From the early exploration of generative AI to a recent technological leap involving an upgraded full-duplex voice call model, Soul Zhang Lu’s team has consistently shown that AI can transform digital connections, both between humans and between humans and AI. In fact, Soul’s engineers have followed a deliberate strategy to humanize AI. The aim was simple: to redefine digital companionship.

Right after its launch in 2016, Soul quickly embraced AI in the social context, exploring various options to improve user engagement and experience. Because Soul Zhang Lu’s team started with a clear goal of enriching digital socialization through the use of AI, the technology was not treated as a backend tool. Instead, Soul’s engineers used it as a front-line participant in user experiences.

For Soul’s engineers, simply automating the platform’s features was never the primary goal. The team was always after emotional augmentation. At first, Soul App employed AI for intelligent matchmaking that would help users find people with common hobbies and interests.

In time, other features such as AI suggestions for conversation starters were also added to the platform. These features were effective, but still modest in scope. Yet they had a definite impact because they aligned with Soul Zhang Lu’s vision of creating a social space that felt organic, pressure-free, and, above all, rooted in human emotions.

Fast forward to 2020, and Soul’s engineers had made giant strides in honing the platform’s AI capabilities. A pivotal moment in this stage was Soul’s official launch of systematic research and development into AIGC (AI-Generated Content) technologies.

Employing AIGC to improve human connections and human-machine interactions gave the platform a range of impressive capabilities. Soul was now in a league of its own. It was no longer just experimenting with AI; rather, the platform was invested in a technology that had proven potential.

These efforts created pathways that led to more sophisticated models. Because Soul’s AI could accept multimodal input, it gained the ability to perceive and interact in a more human-like way. Eventually, these advancements bore fruit in the form of Soul X, the platform’s proprietary large language model.

Launched in 2023, Soul X was nothing short of a game-changer for Soul Zhang Lu’s team. It was the core engine that had everything needed to power Soul’s multimodal capabilities. The development of Soul X allowed the platform to release multiple large models.

So, within a year, Soul’s engineering team had models for text generation, music composition, and a voice call model that was designed to replicate natural speech more closely than ever before. The voice model especially garnered immense attention owing to its real-time performance and notably lifelike emotional expression. It could interpret not just what was being said, but also how it was being said.

Despite its impressive performance, one jarring limitation lingered. The interactions were largely turn-based because the model relied on traditional voice activity detection and sequential dialogue structures. The conversation flow was smoother than what other models offered, yet it lacked true spontaneity.
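For readers unfamiliar with the term, voice activity detection decides when a speaker has started and stopped talking, and a turn-based system waits for that "stopped" signal before it replies. The short Python sketch below illustrates the general idea with a simple energy threshold. It is an illustration only and not Soul's implementation: the function name `detect_speech_segments`, the `threshold` and `hangover` parameters, and the sample energy values are all assumptions made for this example.

```python
from typing import List, Tuple


def detect_speech_segments(
    energies: List[float],
    threshold: float = 0.5,   # assumed cutoff between "quiet" and "speech"
    hangover: int = 2,        # assumed number of quiet frames that ends a turn
) -> List[Tuple[int, int]]:
    """Return (start, end) frame ranges judged to be speech; end is exclusive.

    A segment opens on the first frame at or above the threshold and closes
    once `hangover` consecutive frames fall below it.
    """
    segments: List[Tuple[int, int]] = []
    start = None   # index of the first frame of the open segment, if any
    quiet = 0      # consecutive quiet frames seen inside the open segment

    for i, energy in enumerate(energies):
        if energy >= threshold:
            if start is None:
                start = i
            quiet = 0
        elif start is not None:
            quiet += 1
            if quiet >= hangover:
                # Close the segment just after the last loud frame.
                segments.append((start, i - quiet + 1))
                start = None
                quiet = 0

    if start is not None:
        # Audio ended while a segment was still open.
        segments.append((start, len(energies) - quiet))
    return segments


# Hypothetical per-frame energy values for a short clip.
frames = [0.1, 0.9, 0.8, 0.1, 0.1, 0.7, 0.6, 0.2]
segments = detect_speech_segments(frames)
```

In a turn-based design, the assistant would begin its reply only after a segment closes, which is why a brief pause can trigger a premature response and why the assistant cannot speak while the user is still talking.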

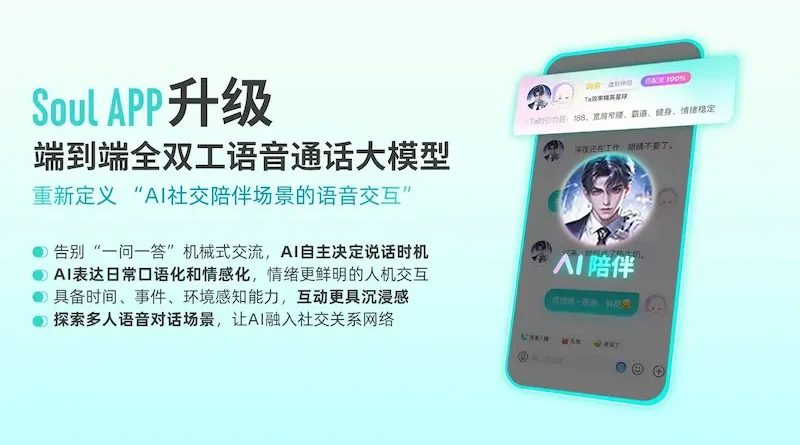

This limitation is what motivated Soul Zhang Lu’s team to refine the model further. A full-duplex upgrade was recently introduced, and it is transformative in terms of both its capabilities and its reach. For starters, the newly enhanced end-to-end full-duplex voice interaction system eliminates the need for VAD (Voice Activity Detection, commonly used to detect where speech starts and ends) mechanisms.

These are replaced by a dynamic, autonomous conversational rhythm. So, the model is no longer bound to the “User speaks and AI responds” loop. This upgrade has made striking changes to what Soul Zhang Lu’s AI can now do. For instance, the model now has the capability to:

- Listen and speak at the same time, much as humans do.

- Interject when the interaction demands it contextually.

- Fill silences by initiating a dialogue.

- Recognize both temporal and semantic cues.

- Participate in parallel discussions, like in debates or group calls.

These capabilities make the conversation not only smoother and faster, but also notably more human-like. In fact, it would be safe to suggest that this upgrade has shifted the paradigm of what is possible in AI-human conversations. Soul Zhang Lu’s model is capable of significant realism in human-like interactions, and this is owed to multiple components. For example:

- The emotional nuance comes courtesy of the model’s ability to not just simulate speech but also to emulate emotions.

- An additional dose of realism is attributed to the variety of vocal tones, which now include contextually relevant responses such as laughter, anger, and sadness. What’s more, these responses are dynamically adjusted to sync with the user’s input.

- The enhanced spoken expressions are a result of realistic verbal tics that include fillers such as “uh”, “like”, brief pauses, and repetitions, which are a part of human speech patterns.

- The model’s ability to perceive time, setting, and conversation flow allows it to understand who it’s talking to, what’s been discussed, and what is likely to happen next.

- The model is also able to respond in a contextually appropriate manner because its autoregressive architecture allows it to consider ambient factors like timing, recent events, and even mood.

When all these components are combined, users get to interact with digital personas that boast believable continuity and memory. The best part is that Soul Zhang Lu’s team is rapidly building on this advancement. Because this new upgrade also gives the model impressive scalability, Soul’s engineers are now busy developing group interaction capabilities, which will allow the model to intelligently participate in multi-person voice calls.

This recent voice model upgrade therefore fits neatly into Soul Zhang Lu’s broader vision of using AI as an active participant in relationship-building, idea exchange, and emotional support, both in one-on-one conversations and in group interactions.
